When you type a question into ChatGPT, it kind of feels like there’s a little genius living in your laptop, right? Ask anything, from “How do I get a job in AI?” to “Explain quantum physics,” and boom, an answer shows up in seconds.

But here’s the secret: there’s no mini-Einstein in there. ChatGPT isn’t thinking. It’s guessing. Really, really well.

The easiest way to picture it is autocomplete on your phone. You type “Let’s order…” and your phone suggests “pizza.” ChatGPT does the same thing, just on a much bigger scale. Instead of finishing a short text, it’s finishing entire paragraphs, predicting the next word over and over until it sounds like a full-blown explanation.

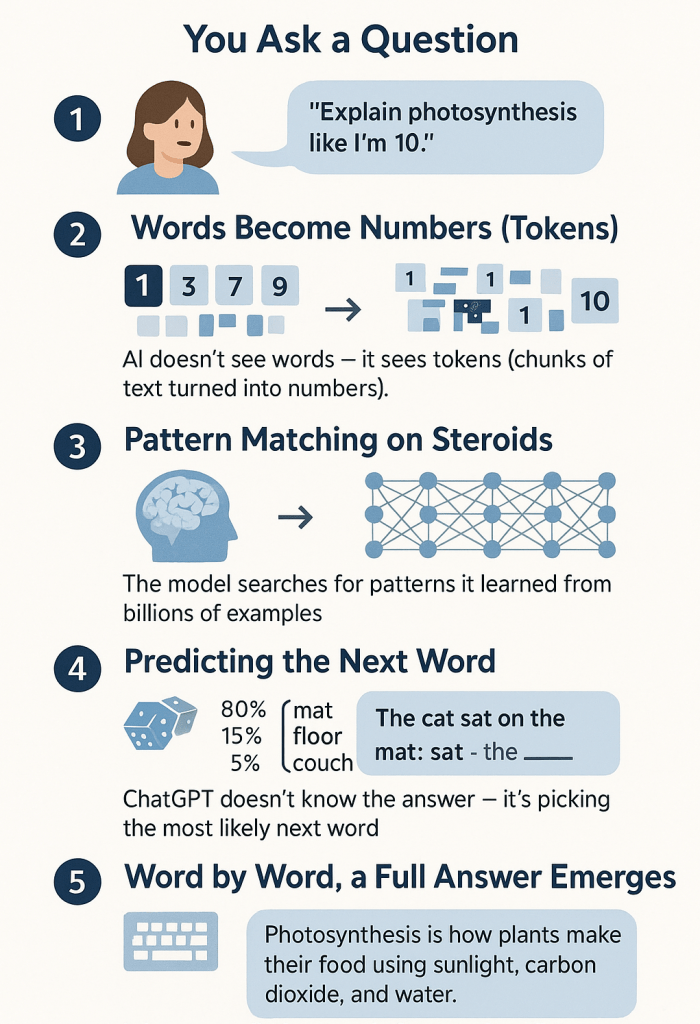

Here’s the play-by-play of what’s happening under the hood:

1. **You ask a question.** Your words get chopped into little pieces called tokens (whole words, chunks of words, or punctuation) so the AI can process them.

2. **Pattern party.** The model checks: “Have I seen something like this before? What words usually come next?”

3. **One word at a time.** ChatGPT doesn’t know the whole answer in advance. It predicts one word (technically, one token), then the next, then the next… like filling in a giant Mad Libs.

4. **No lookup, no crystal ball.** It’s not searching a database or looking up facts. It’s generating them in real time, which is why it sometimes nails it and sometimes makes things up with way too much confidence.

5. **But it can use context about you.** Within the same conversation, ChatGPT can “remember” what you asked earlier because those earlier messages are fed back in along with your new one, so it can tailor its answers. (Example: if you say you have a 6-year-old and later ask for dinner ideas, it might suggest something kid-friendly.) And if you’ve saved preferences like “keep responses short” or “I’m a marketer,” it can adjust to your style. It doesn’t “know” you like a person does, but it can respond as if it remembers your vibe.
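Want to see the “one word at a time” idea in action? Here’s a deliberately tiny sketch in Python. It’s a toy “bigram” autocomplete: it only looks at the previous word and picks whichever word most often came next in a small, made-up training text. That’s a big simplification, not how ChatGPT actually works. Real models use a huge neural network over subword tokens and look at the whole conversation at once. The training sentences and the names `tokenize`, `train_bigrams`, and `generate` are invented just for this illustration.

```python
from collections import Counter, defaultdict

# Made-up "training data" for illustration only.
TRAINING_TEXT = (
    "let's order pizza tonight . "
    "let's order pizza again . "
    "let's order sushi tonight . "
)


def tokenize(text):
    # Toy tokenizer: lowercase and split on spaces.
    # Real models split text into subword tokens instead.
    return text.lower().split()


def train_bigrams(tokens):
    # For every word, count which words showed up right after it.
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_new_words=5):
    words = tokenize(prompt)
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # Never saw this word in training, so no guess.
        # Pick the word that most often came next (ties go to the one seen first).
        next_word, _ = candidates.most_common(1)[0]
        words.append(next_word)
        if next_word == ".":
            break  # Stop at the end of a sentence.
    return " ".join(words)


model = train_bigrams(tokenize(TRAINING_TEXT))
print(generate(model, "Let's order"))
```

With this toy data it finishes “Let’s order” with “pizza,” simply because “pizza” followed “order” more often than “sushi” did. Then it guesses the next word from “pizza,” and so on. No understanding involved, just counting what usually comes next.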

So yeah, ChatGPT isn’t secretly alive. It’s more like the world’s most articulate autocomplete.

And that’s the fun part: the reason it feels so smart isn’t because it thinks like us. It’s because we’re more predictable than we like to admit.